Abstract

We present a novel LLM-based pipeline for creating contextual descriptions of human body poses in images using only auxiliary attributes. This approach facilitates the creation of the MPII Pose Descriptions dataset, which includes natural language annotations for 17,367 images containing people engaged in 410 distinct activities. We demonstrate the effectiveness of our pose descriptions in enabling zero-shot human-centric classification using CLIP. Moreover, we introduce the FocusCLIP framework, which incorporates Subject-Focused Attention (SFA) in CLIP for improved text-to-image alignment. Our models were pretrained on the MPII Pose Descriptions dataset and their zero-shot performance was evaluated on five unseen datasets covering three tasks. FocusCLIP outperformed the baseline CLIP model, achieving an average accuracy increase of 8.61% (33.65% compared to CLIP's 25.04%). Notably, our approach yielded improvements of 3.98% in activity recognition, 14.78% in age classification, and 7.06% in emotion recognition. These results highlight the potential of integrating detailed pose descriptions and subject-level guidance into general pretraining frameworks for enhanced performance in downstream tasks.

🚧 Preliminary Work

Conducted in late 2023, this represents an early exploration of LLM-driven human pose descriptions—before the advent of multimodal LLMs. It serves as a proof of concept: embedding keypoint coordinates into text prompts to generate natural language annotations. Results are shared here as a preliminary study.

1. Unimodal LLM-Based Pipeline

Structured LLM prompts that directly convert 2D keypoint cooordinates into rich, natural-language pose descriptions.

2. MPII Pose Descriptions

Dataset of 17,367 images & 410 activities, each annotated with contextual body pose descriptions.

3. FocusCLIP Framework

Subject-Focused Attention in CLIP, boosting zero-shot accuracy by +8.61 pp on human-centric tasks.

MPII Pose Descriptions Dataset

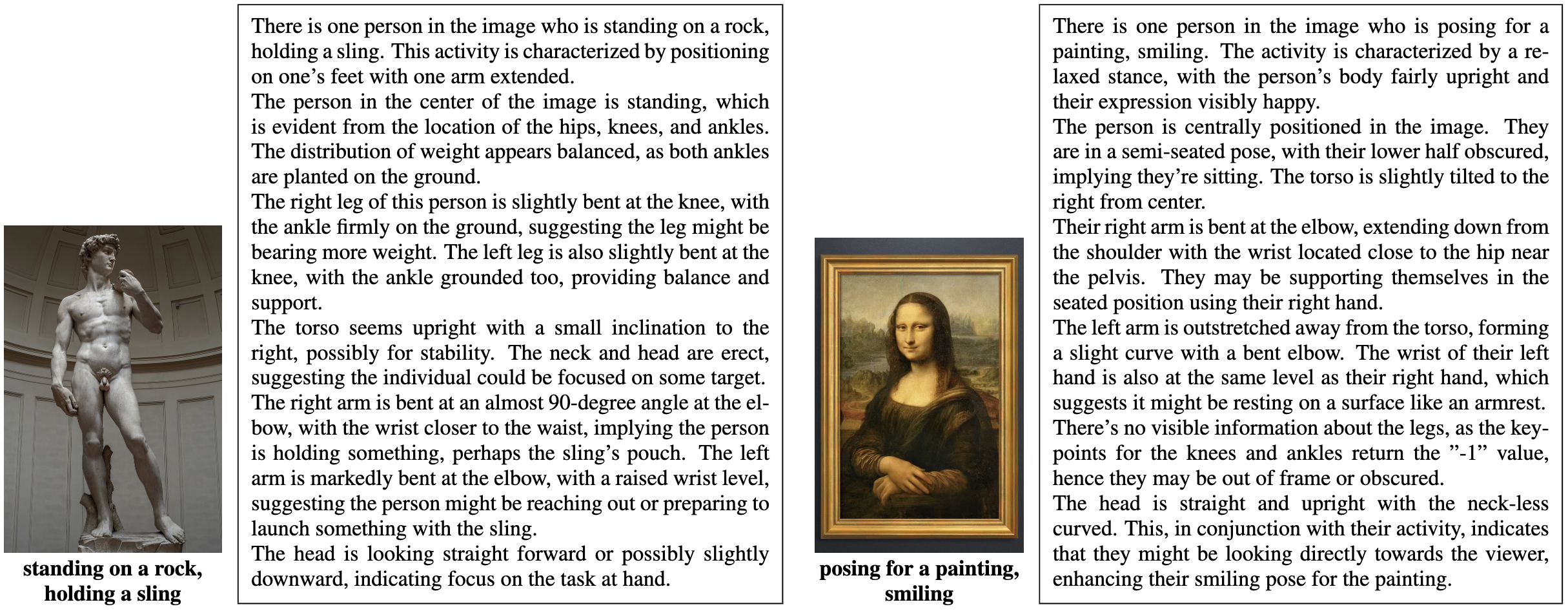

Sample pose descriptions generated by the LLM pipeline are shown in the figure below, which is taken from the paper.

Sample Pose Description. We use our pipeline to generate pose descriptions for two famous artworks, the Statue of David and the

Mona Lisa. The LLM was provided body keypoints obtained using an off-the-shelf pose estimation network and manual activity labels.

Sample Pose Description. We use our pipeline to generate pose descriptions for two famous artworks, the Statue of David and the

Mona Lisa. The LLM was provided body keypoints obtained using an off-the-shelf pose estimation network and manual activity labels.

Dataset Viewer

Dataset Details

Generation Method

- Extract 2D keypoint coordinates from MPII images.

- Embed joint locations and activity labels into structured LLM prompts.

- Use GPT-3.5/GPT-4 to produce free-form descriptions of body posture and action.

Contents

- 17,367 human-centric images.

- 410 distinct activity categories (e.g., running, sitting, playing instrument).

- Up to four varied natural-language descriptions per image, capturing pose nuances.

- Available via Hugging Face: MPII Pose Descriptions Dataset

Key Stats

- Images: 17,367

- Activities: 410

- CLS Tasks: Activity, Age, Emotion

- Zero-Shot Gain: +8.61 pp avg.

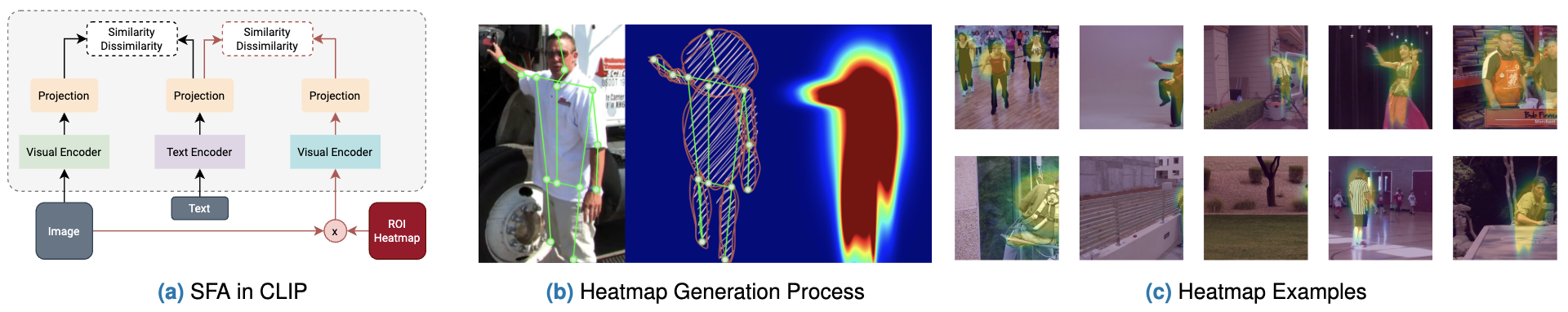

FocusCLIP Framework

The FocusCLIP framework. (a) We add SFA in CLIP, highlighting important areas for downstream tasks, and train the model with a dual contrastive loss to learn a joint embedding space between the raw image, heatmap-highlighted image, and text. The text consists of pose descriptions for people in images written by an LLM. (b) The heatmap generation process uses keypoints, which involves grouping keypoints into body parts and blending them together using Gaussian ellipses to produce the heatmaps. (c) This technique effectively highlights individuals within diverse environments.